- Update on digital pathology and tissue-based AI algorithms in Switzerland

Digitisation of the pathology lab is occurring across Switzerland, at differing paces. Although “going digital” has benefits, the challenges are numerous and may hinder a smooth transition for all institutes. Importantly, only digital labs can take full advantage of Artificial Intelligence (AI) solutions. These AI tools are many, but few are certified as medical devices. They are increasingly complex, and in some cases produce unexplainable outputs that cannot be verified by pathologists, raising questions of trust, liability and responsibility. In this short article, we present the current state of digital pathology in Switzerland, the challenges to becoming fully digital, and the implications of the anticipated use of computational biomarker tests.


Keywords: digital pathology, AI, biomarkers

Introduction

Pathology as a medical discipline is undergoing a major transformation (1). Like radiology before it, pathology is “going digital”. Digitisation of glass slides and of lab processes is in progress across Switzerland, and the AI market is exploding with tools promising to help pathologists in their daily work. In addition, generative AI and large language models (LLMs) have demonstrated potential for accurate pathological diagnosis, even indicating the best therapeutic strategies, when provided with histological images and text prompts (2, 3). Recently, foundation models have gained in popularity: these models are trained on millions of images and are expected to detect patterns in tissues, and they can be further fine-tuned to produce even more accurate results for specific tasks (4). AI is everywhere in pathology, or so it seems, and surely must be ready for prime time. Is this really the case? Where do we stand with digital pathology and the implementation of AI in pathology in Switzerland today? Here, we address this question by examining the advantages and disadvantages of going digital, the introduction of AI tools, and the promises and concerns of increasingly complex tissue-based oncological biomarkers such as computational companion diagnostic tests.

Where does the digitisation of the pathology lab stand today?

To date, most pathology labs in Switzerland will have some degree of digitalisation of glass slides. Even with only one digital slide scanner, and no other specialized software, digital cases can be sent from one institution to the next for second opinions, and hence expert diagnoses for difficult cases can be obtained more rapidly. If in-house specialists are unavailable to make these diagnoses, scans can easily be shared with external specialists and reviewed digitally. Discussion of cases within multi-disciplinary teams is now facilitated and no longer requires a microscope or camera.

A fully digital lab has several advantages. As digital slides are used for diagnosis on the computer, previous cases from the same patient can be pulled up for comparison in a matter of seconds, without manually searching for and retrieving slides from the archive (5). Multiple slides and stains from the same case can also be evaluated side-by-side by multi-panel viewing. The result is a more comprehensive overview of all patient material, which facilitates diagnosis, more accurate measurements, and speedy annotation of structures. A truly digitized lab should consist of paperless workflows, reducing the potential for human error and providing a high degree of standardisation. However, the biggest benefit of the digitisation of the pathology lab is the opportunity to work with computer-assisted diagnostic (CAD) tools, namely AI algorithms. Without this aspect, full digitisation for the sole purpose of replacing the microscope with a computer is not an easy sell, neither to pathologists nor to hospital or university management.

Why are most pathology labs in Switzerland not already fully digitized?

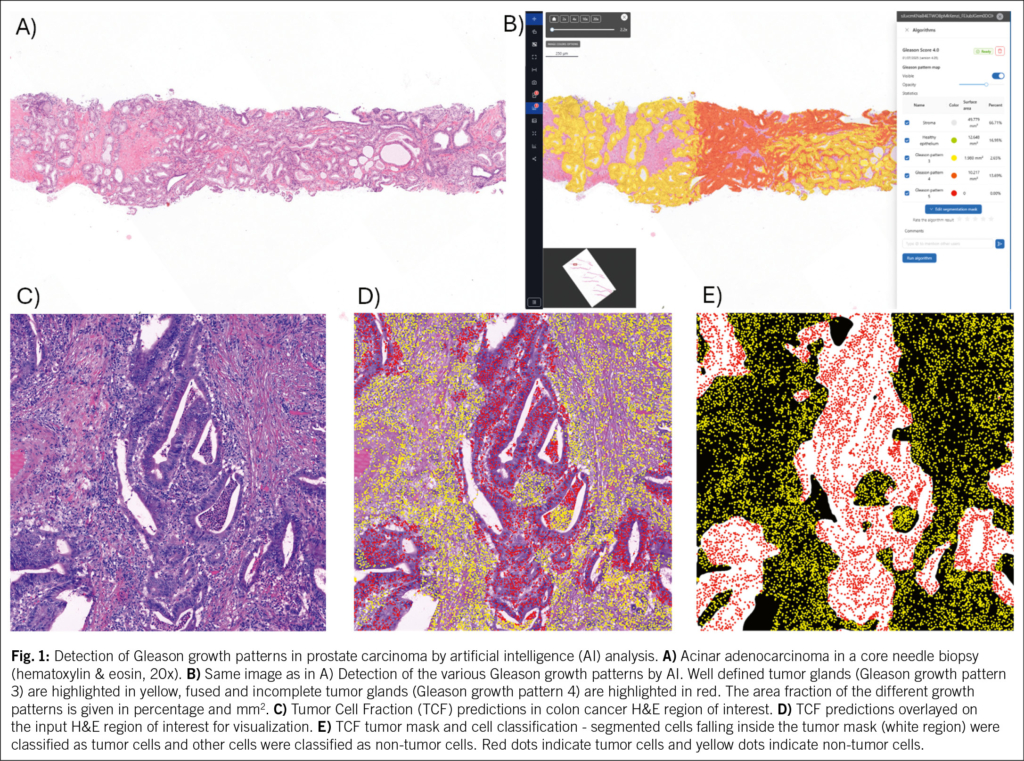

It is estimated that only around 5 % of all institutes of pathology in Switzerland are fully digital. Why so few? The digital transformation is essentially a large IT project, which requires a total re-evaluation and optimisation of all lab-related and diagnostic reporting processes, which include additional steps (6–8). Because expertise in the processes and in the pathology domain is key, the digital transformation project must be led by the pathology departments themselves (Fig. 1). This requires resources and time, possibly more personnel, and a multi-disciplinary team of people, from IT and lab staff to quality assurance officers and pathologists (9). Software and hardware integrations are needed to connect the various components of the digital pathology system, including slide scanners, lab information systems (LIS) and Image Management Systems (IMS). Such integrations involve conversations between different vendors, which can be arduous and often lead to impractical interfaces that sabotage the desired workflow. Swiss guidelines for the clinical implementation of digital pathology have recently been published and can be used as a basis to address implementation challenges (10).

The equipment and software are expensive: a single conventional slide scanner costs around 250 000 CHF, and a moderately sized pathology institute may need between 4 and 6 of these simply to cover the daily workload. Additional costs for various lab equipment, IMS, IT integrations, monitors, and devices for image navigation need to be considered, and all together will most likely require a tender bidding process. With an average scan approximating 2 GB in size, and a moderately sized institute of pathology diagnosing 2500 slides/day, image storage solutions and “compute” facilities, whether Cloud-based or on premise, quickly accumulate massive costs and are an important financial burden (11). The initial investment in the digital transformation can easily cost several million CHF and needs to be sustainable (12). Where the money comes from is an important point, and the business case may currently be unconvincing, as the full impact and added value will only become clear once systems have stabilized (13).
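To make the scale of these figures concrete, the following is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted above (2 GB per scan, 2500 slides per day, 250 000 CHF per scanner, 4 to 6 scanners). The number of working days per year and the decimal unit conversions are assumptions introduced purely for illustration, and the estimate ignores compression, redundancy, and retention policies.

```python
# Back-of-the-envelope estimate based on the figures quoted in the text.
GB_PER_SLIDE = 2             # average scan size (from the text)
SLIDES_PER_DAY = 2500        # moderately sized institute (from the text)
SCANNER_COST_CHF = 250_000   # single conventional scanner (from the text)
WORKING_DAYS_PER_YEAR = 250  # ASSUMPTION for illustration, not from the text


def daily_storage_tb(gb_per_slide=GB_PER_SLIDE, slides_per_day=SLIDES_PER_DAY):
    """Raw image volume generated per day, in terabytes (1 TB = 1000 GB)."""
    return gb_per_slide * slides_per_day / 1000


def yearly_storage_pb(working_days=WORKING_DAYS_PER_YEAR):
    """Raw image volume generated per year, in petabytes (1 PB = 1000 TB)."""
    return daily_storage_tb() * working_days / 1000


def scanner_cost_range_chf(min_scanners=4, max_scanners=6):
    """Lower and upper bound of the scanner purchase cost alone, in CHF."""
    return min_scanners * SCANNER_COST_CHF, max_scanners * SCANNER_COST_CHF


if __name__ == "__main__":
    low, high = scanner_cost_range_chf()
    print(f"Daily image volume:  {daily_storage_tb():.1f} TB")
    print(f"Yearly image volume: {yearly_storage_pb():.2f} PB")
    print(f"Scanners alone:      {low:,} to {high:,} CHF")
```

Under these assumptions, scanning alone produces several terabytes of raw images per working day, which helps explain why storage is singled out as a recurring cost rather than a one-off purchase.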

Digital pathology also requires pathologists to adjust their workflows or learn new techniques, which may be off-putting to MDs (14). The digital transformation profoundly affects pathologists’ way of working: the microscope, the main tool of the trade for the last 200 years, is being replaced by a computer. In a few ways, digital scans cannot reproduce the very detailed, fine-grained attributes of tissues visualized under the microscope (15). Most slide scanners today fail to accurately capture cytological specimens, because these slides contain thicker material that requires z-stack scanning to visualize the full depth, and many scanners lack this capability. There are additionally some well-described diagnostic pitfalls that pathologists must be made aware of, including under-estimating areas of high-grade dysplasia and difficulty detecting certain bacteria (15, 16). Taken together, for pathologists the digital transformation of the lab means replacing the most important tool of their trade with something new and interpreting images for diagnosis in a completely different way, while still assuring the quality of their diagnoses, with no change in turn-around-time (TAT). Moreover, there is scant evidence to date from fully digitized labs regarding the impact of digital diagnosis on TAT; definitive conclusions will likely only emerge as labs finish implementing, refining, and comparing their pre- and post-digital approaches.

“Spoilt for choice”

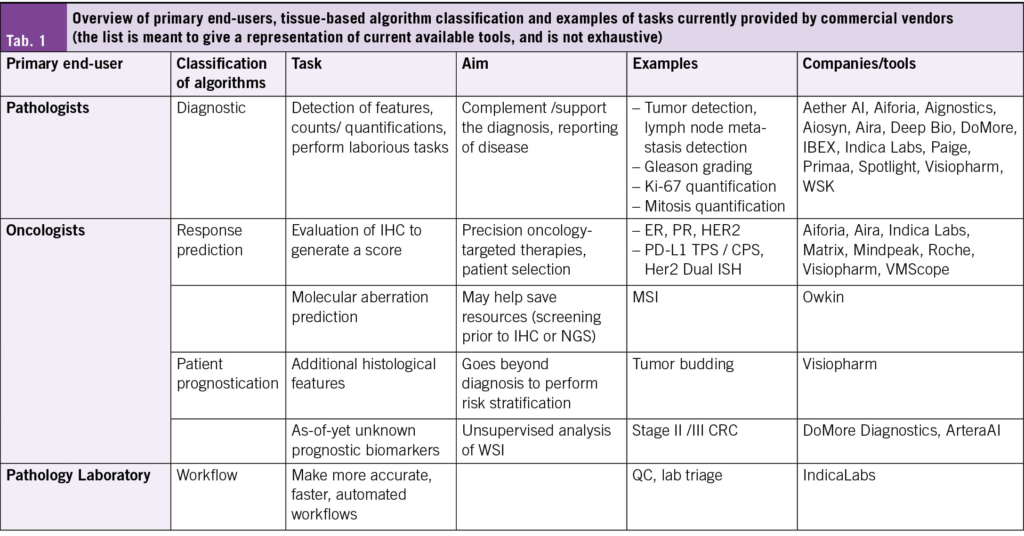

Only a fully digitized lab can consider implementing AI without suffering from disruptive logistical issues in the lab. Dozens of AI vendors and start-up companies offer similar tools for similar tasks, with similar accuracy, but at different costs or with different service packages (17). Most companies today offer “Research Use Only” tools, while AI tools that are CE-IVD or CE-IVDR marked as medical devices are few and far between. One example of an FDA-approved AI tool for pathology is the prostate cancer detection and Gleason pattern grading tool from PAIGE. Breast cancer biomarker panels, tumor detection, and lymph node metastasis detection algorithms are offered by a variety of companies, yet the portfolio of different tools being offered is limited (17) (Tab. 1). Hence, there is reticence among pathologists to commit to a single company. In addition, the financial incentive is low: there is currently no possibility of reimbursement of AI support for histopathology diagnosis in Switzerland. Moreover, there is little evidence that the much-debated TAT numbers are positively affected by the introduction of AI. Studies are urgently needed to understand the influence of AI on daily routine in a real-life setting (rather than in a research setting, as is most often the case now). If seamless integration of AI tools into workflows is achievable, then TAT improvements could become a reality, but the truth is that few examples of seamless integration exist.

The Ground Truth. Whose Ground Truth?

Commercially available algorithms for diagnostic tasks (e.g. tumor detection, Ki-67 quantification, or mitosis detection) have until now been trained on datasets of hundreds to thousands of manually generated and curated annotations created by pathologists, with the underlying assumption that these annotations represent a “ground truth” and are an accurate representation of the feature to eventually be predicted (18). However, as seen in Faryna et al., some features suffer from inter-pathologist variability, which is also reflected in the AI tools and raises the question of how to establish a reliable Ground Truth (19). If the Ground Truth is not reproducible, how can an AI algorithm be expected to outperform pathologists? In fact, in most cases the accuracy of the algorithms reaches that of pathologists, showing them to be statistically non-inferior, and this is deemed satisfactory. Pathologists are often eager to have AI support in “ambiguous” diagnostic cases. Unfortunately, ambiguous cases remain ambiguous because AI algorithms are trained on datasets annotated by medical experts; when experts disagree, this uncertainty is embedded in the dataset and ultimately propagated to the trained model. Moreover, although AI developers strive to produce unbiased and generalizable algorithms, their development is often hindered by a lack of diversity in the datasets (scanners, protocols, patient demographics) (20–22). In addition, different AI tools generate different results on the same case, with an inter-observer agreement in the range of that of their human counterparts (19). Collaboration between institutes of pathology and AI vendors is therefore crucial to help alleviate this issue.

For algorithms trained to quantify immunohistochemistry staining, such as PD-L1, additional inter-lab variability (leading to lighter/darker staining, or different numbers of cells in thicker or thinner sections) affects performance, such that each algorithm should be additionally fine-tuned to each institute’s specific protocol, and thus to each institute’s “ground truth”. This creates additional resource requirements for the institutes, which must now perform validation studies for each new algorithm applied.
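Agreement between two raters, whether two pathologists or a pathologist and an algorithm, is often summarised with a chance-corrected statistic. The sketch below computes unweighted Cohen's kappa in plain Python. It is offered only as an illustration of how such agreement could be quantified: the article does not state which statistic the cited studies used, the grade values in the example are invented, and for ordinal scores such as Gleason grade groups a weighted variant would usually be more appropriate.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters scoring the same cases.

    ratings_a, ratings_b: equal-length sequences of categorical labels.
    Returns 1.0 for perfect agreement and about 0.0 for chance-level agreement.
    """
    if len(ratings_a) != len(ratings_b) or len(ratings_a) == 0:
        raise ValueError("Both raters must score the same, non-empty set of cases")

    n = len(ratings_a)
    # Observed agreement: fraction of cases where both raters give the same label.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Expected agreement by chance, from each rater's label frequencies.
    freq_a = Counter(ratings_a)
    freq_b = Counter(ratings_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both raters used a single identical label for every case.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # INVENTED example data: grade groups assigned to ten cases.
    pathologist = [1, 2, 2, 3, 4, 5, 3, 2, 1, 4]
    algorithm = [1, 2, 3, 3, 4, 5, 2, 2, 1, 5]
    print(f"kappa = {cohens_kappa(pathologist, algorithm):.2f}")
```

The same function can be applied to two algorithms run on the same cases, which is one way to compare algorithm-to-algorithm agreement with pathologist-to-pathologist agreement.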

Which stakeholders benefit from the output of today’s algorithms on the market?

The examples given above primarily complement or support pathologists’ primary diagnosis of disease. In an ideal world, these diagnostic tools would help make labour-intensive or time-consuming tasks (lymph node metastasis screening, Helicobacter pylori detection, mitosis quantification, counts of eosinophils, etc.) easier and more efficient (23). The current Swiss view with regard to the clinical validation of image analysis and AI algorithms is summarized in Berezowska et al. (24). Despite the issue of inter-observer variability, evidence shows that pathologists’ scores still converge towards the ground truth overall with the use of a computer-assisted diagnostic support tool, indicating a true benefit in using an AI algorithm (25). In addition to these, there are algorithms for immunohistochemical biomarkers that are evaluated to help oncologists select patients for certain targeted therapies, such as PD-L1 (e.g. TPS or CPS), ER, PR, HER2, or HER2 dual or fluorescence in situ hybridisation (ISH). Although far from perfect, these algorithms can help guide the final scoring by pathologists. So, while some algorithms themselves may be generated using “black box” methods, the outputs or predictions are the histological features that pathologists want to report, and they can observe and verify these features.

The increasing complexity of biomarker scoring

The Immunoscore® gained popularity several years ago as a potential prognostic test in stage II and low-risk stage III colon cancers (26) and was eventually listed as a potential prognostic factor in the ESMO guidelines 2020 (27). This computational test not only quantifies the number of CD3+ and CD8+ T-cells, but also considers the location of the cells, namely within the tumor center or at the tumor invasion front. After evaluating the highest densities of these cells across multiple regions of interest, a final score emerges from Immunoscore 1 (low) to Immunoscore 4 (high) (28). This test is more complicated than a simple count of T-cell numbers, as it considers an additional piece of information, namely the location of these cells within the tumor microenvironment. The results are nonetheless verifiable by pathologists, who can correct or adjust the score prior to making their final reports.

An important problem arises when algorithms are trained to predict outcome variables, such as prognosis or therapy response, directly from whole slide images using end-to-end deep learning approaches, rather than using histological ground truth. One such example is by DoMore Diagnostics (29, 30). Here, a prognostic risk classification can be generated from a standard diagnostic H&E slide, which is first scanned and the image tessellated into smaller tiles. Five different deep learning algorithms run across each tile, at two magnifications. Each algorithm produces a probability of having a good prognosis, and at the end, the probabilities are aggregated into a final patient score of “Good”, “Uncertain” or “Poor” prognosis. This assay has been trained, tested and validated on thousands of patient samples including retrospective clinical trials. Unfortunately, the decisions for the predictions of the algorithms are not interpretable; they cannot be explained or verified. Even with the use of “attention mechanisms” (31), which aim to bring attention to the areas responsible for the decision-making process, it is impossible to understand the reason behind the decision. It is a true “black box”. Moreover, some companies are offering the possibility to submit digital scans online for remote processing and result generation. In this case, not only is the algorithm a black box, but the entire procedure of scan evaluation and reporting is as well.

Hence such algorithms are currently met with apprehension in the pathology community. These scores are uninterpretable, unexplainable, and unverifiable. Questions of responsibility, liability, and ethics prevent most pathologists from contemplating the use of such algorithms today.
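The tile-level aggregation described above can be sketched as follows. This is a simplified illustration and not the vendor's method: the averaging scheme, the two cut-offs, and the model names are hypothetical placeholders, and the per-tile probabilities would in practice come from trained networks rather than hard-coded lists.

```python
from statistics import mean

# HYPOTHETICAL cut-offs chosen only for illustration; not taken from the cited studies.
GOOD_THRESHOLD = 0.6
POOR_THRESHOLD = 0.4


def patient_score(model_tile_probs):
    """Aggregate per-tile probabilities of good prognosis into one patient label.

    model_tile_probs: dict mapping a model name (e.g. one network at one
    magnification) to a non-empty list of per-tile probabilities in [0, 1].
    Returns (ensemble_probability, label).
    """
    if not model_tile_probs or any(len(p) == 0 for p in model_tile_probs.values()):
        raise ValueError("Every model needs at least one tile probability")

    # Step 1: average over tiles within each model (ASSUMED aggregation rule).
    per_model = [mean(probs) for probs in model_tile_probs.values()]
    # Step 2: average over models to obtain a single ensemble probability.
    ensemble = mean(per_model)

    if ensemble >= GOOD_THRESHOLD:
        label = "Good"
    elif ensemble <= POOR_THRESHOLD:
        label = "Poor"
    else:
        label = "Uncertain"
    return ensemble, label


if __name__ == "__main__":
    # INVENTED tile probabilities for two hypothetical models.
    example = {
        "model_1_low_magnification": [0.9, 0.8],
        "model_1_high_magnification": [0.7, 0.9],
    }
    probability, label = patient_score(example)
    print(f"{label} (ensemble probability {probability:.2f})")
```

Even in this toy form, the output is a single number and a label. Nothing in it corresponds to a histological feature that a pathologist could look for on the slide, which illustrates the verification problem raised above.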

The computational companion diagnostic test

Computational approaches to investigate biomarkers can outperform standard assessment by “eyeballing”. A recent example is the quantitative assessment of TROP2 as a predictive biomarker for patients with non-small cell lung cancer (NSCLC) treated with the TROP2-directed antibody-drug conjugate Datopotamab Deruxtecan (Dato-DXd). This test, called the “Quantitative Continuous Score, QCS Normalized Membrane Ratio”, is based on calculating the optical density of membranous staining and cytoplasmic staining of each tumor cell and then dividing the optical density of membrane staining by that of membrane plus cytoplasmic staining together (32). If the value of the ratio in a cell is < 0.56 in more than 75 % of cells, the tissue is considered positive. Pathologists, unfortunately, will in most cases not be able to verify these findings to such a precise degree, if at all. Although this assay is still in its early days, it has been proposed as a novel computational companion diagnostic test and has been included in prospective clinical trials for validation (33). It nonetheless underlines another major challenge when it comes to AI tools in pathology today: the QCS can only be performed if tissues are stained on immunostainers from a particular company, scanned on slide scanners from the same company, and analysed using the proprietary software. These constraints highlight the limited potential of the assay in its current form, as the slide scanning market in most digital labs outside of the research setting is dominated by more prominent scanner vendors. Additionally, institutes of pathology often select only one vendor for slide scanners to keep lab processes simple and lean. Purchasing multiple scanners from different vendors “solely” to perform different companion diagnostic tests is unrealistic at best.
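The scoring rule described above can be written out as a short sketch. The cut-offs (0.56 and 75 %) and the ratio definition are taken from the text as stated; the function names, the input format, and the example optical densities are hypothetical, and the actual assay relies on proprietary cell segmentation and measurement steps that are not reproduced here.

```python
NMR_CUTOFF = 0.56            # per-cell ratio cut-off as stated in the text
CELL_FRACTION_CUTOFF = 0.75  # fraction of cells as stated in the text


def normalized_membrane_ratio(od_membrane, od_cytoplasm):
    """Membrane optical density divided by membrane plus cytoplasm optical density."""
    total = od_membrane + od_cytoplasm
    if total <= 0:
        raise ValueError("Total optical density must be positive")
    return od_membrane / total


def is_qcs_nmr_positive(cells):
    """Apply the rule from the text to a list of (od_membrane, od_cytoplasm) pairs.

    The tissue counts as positive when more than 75 % of tumor cells have a
    normalized membrane ratio below 0.56.
    """
    ratios = [normalized_membrane_ratio(m, c) for m, c in cells]
    if not ratios:
        raise ValueError("At least one tumor cell is required")
    cells_below_cutoff = sum(r < NMR_CUTOFF for r in ratios)
    return cells_below_cutoff / len(ratios) > CELL_FRACTION_CUTOFF


if __name__ == "__main__":
    # INVENTED optical densities for five tumor cells.
    example_cells = [(0.2, 0.8), (0.3, 0.7), (0.4, 0.6), (0.5, 0.5), (0.9, 0.1)]
    print("QCS-NMR positive:", is_qcs_nmr_positive(example_cells))
```

The rule itself is simple arithmetic. What a pathologist cannot reproduce by eye is the input: a per-cell optical density for membrane and cytoplasm across thousands of tumor cells.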

Where does it go from here?

As more and more institutes of pathology in Switzerland become digital, experience with AI tools will increase as well. Several questions emerge at this time: how should the pathology-oncology community deal with the arrival of computational companion diagnostic tests whose outputs cannot be verified? In the case of misdiagnosis, who is liable? Is it ethical to run such tests on patient materials when it is not understood how the test functions and its results cannot be verified? How might the attitudes of pathologists and clinicians change with cumulative experience with these AI tools?

AI start-up companies currently flood the market. This situation will undoubtedly converge to a handful of vendors capable of meeting the demands of pathologists and, importantly, the requirement of seamless integration of their products into fully digital workflows. In-house algorithms will continue to be developed and shared. In light of this, the Swiss Consortium for Digital Pathology (SDiPath) has recently published guidelines for packaging and sharing AI tools between institutions in Switzerland (10). Urgent discussions must take place regarding reimbursement for the use of AI as decision support tools; otherwise, the financial sustainability of implementing them is in peril.

Special applications of LLMs or Vision Language Models (VLMs) will start to take their place in diagnostic workflows, as support tools using text and images, and are welcome especially in the context of rare diseases, or image matching / image retrieval (2, 34). A shift toward more multi-modal models, incorporating images as well as molecular, clinical, metabolomic, proteomic, and spatial information, can also be anticipated.

Conclusion

Digitisation in pathology impacts the foundations on which pathology diagnosis is carried out. The transformation has benefits, but also major challenges, including initial investments in hardware, storage, and software and the financial sustainability of these elements in addition to the AI tools. Interoperability issues within the workflow persist and can negatively impact TAT. Moreover, in view of the use of “black box” algorithms, the diagnostic community will be faced with how to handle these novel situations and be prepared to ask the difficult questions to identify the best way forward for patients.

Copyright

Aerzteverlag medinfo AG

University of Zurich

Faculty of Medicine

Pestalozzistrasse 3

8032 Zürich

Institute of Pathology, Cantonal Hospital Aarau

Tellstrasse 25

5001 Aarau

Department of Biomedical Engineering, Emory University and Georgia

Institute of Technology, Atlanta, USA.

Geneva University Hospitals

Department of Oncology, Division of Precision Oncology,

Department of Diagnostics, Division of Clinical Pathology

Centre Médical Universitaire

Rue Michel-Servet 1

1206 Genève

University of Bern

Institute of Tissue Medicine and Pathology (ITMP)

Murtenstrasse 31

3008 Bern

The authors have declared no conflicts of interest in connection with this article.

- Digitisation of the pathology lab is being implemented at different paces across Switzerland.

- «Going digital» is a disruptive process with many challenges to overcome, e.g. costs, personnel resources, IT integration with multiple vendor products, and adoption of a new microscope-free diagnostic reporting environment.

- Only completely digitized labs can benefit fully from implementing Artificial Intelligence solutions.

- The outputs of AI algorithms for tissue-based biomarkers are becoming more complex, unexplainable and therefore less verifiable by pathologists, raising the question of whether such AI tools will be accepted by pathologists and oncologists alike.

1. C. Eloy et al., DPA-ESDIP-JSDP Task Force for Worldwide Adoption of Digital Pathology. J Pathol Inform 12, 51 (2021).

2. J. Clusmann et al., Prompt injection attacks on vision language models in oncology. Nat Commun 16, 1239 (2025).

3. M. Y. Lu et al., A multimodal generative AI copilot for human pathology. Nature 634, 466-473 (2024).

4. J. Lipkova, J. N. Kather, The age of foundation models. Nat Rev Clin Oncol 21, 769-770 (2024).

5. B. J. Williams, D. Bottoms, D. Treanor, Future-proofing pathology: the case for clinical adoption of digital pathology. J Clin Pathol 70, 1010-1018 (2017).

6. A. Eccher et al., Digital pathology structure and deployment in Veneto: a proof-of-concept study. Virchows Arch 485, 453-460 (2024).

7. F. Fraggetta et al., Best Practice Recommendations for the Implementation of a Digital Pathology Workflow in the Anatomic Pathology Laboratory by the European Society of Digital and Integrative Pathology (ESDIP). Diagnostics (Basel) 11, (2021).

8. J. Temprana-Salvador et al., DigiPatICS: Digital Pathology Transformation of the Catalan Health Institute Network of 8 Hospitals-Planification, Implementation, and Preliminary Results. Diagnostics (Basel) 12, (2022).

9. MedMedia. (2019).

10. A. Janowczyk et al., Swiss digital pathology recommendations: results from a Delphi process conducted by the Swiss Digital Pathology Consortium of the Swiss Society of Pathology. Virchows Arch 485, 13-30 (2024).

11. A. Laurinavicius, P. Raslavicus, Consequences of “going digital” for pathology professionals – entering the cloud. Stud Health Technol Inform 179, 62-67 (2012).

12. B. J. Williams, D. Bottoms, D. Clark, D. Treanor, Future-proofing pathology part 2: building a business case for digital pathology. J Clin Pathol 72, 198-205 (2019).

13. X. Matias-Guiu et al., Implementing digital pathology: qualitative and financial insights from eight leading European laboratories. Virchows Arch, (2025).

14. J. E. M. Swillens et al., Pathologists’ first opinions on barriers and facilitators of computational pathology adoption in oncological pathology: an international study. Oncogene 42, 2816-2827 (2023).

15. B. J. Williams, P. DaCosta, E. Goacher, D. Treanor, A Systematic Analysis of Discordant Diagnoses in Digital Pathology Compared With Light Microscopy. Arch Pathol Lab Med 141, 1712-1718 (2017).

16. E. Goacher, R. Randell, B. Williams, D. Treanor, The Diagnostic Concordance of Whole Slide Imaging and Light Microscopy: A Systematic Review. Arch Pathol Lab Med 141, 151-161 (2017).

17. EMPAIA. (2025).

18. D. Montezuma et al., Annotation Practices in Computational Pathology: A European Society of Digital and Integrative Pathology (ESDIP) Survey Study. Lab Invest 105, 102203 (2024).

19. K. Faryna et al., Evaluation of Artificial Intelligence-Based Gleason Grading Algorithms “in the Wild”. Mod Pathol 37, 100563 (2024).

20. A. Asilian Bidgoli, S. Rahnamayan, T. Dehkharghanian, A. Grami, H. R. Tizhoosh, Bias reduction in representation of histopathology images using deep feature selection. Sci Rep 12, 19994 (2022).

21. K. Nakagawa et al., AI in Pathology: What could possibly go wrong? Semin Diagn Pathol 40, 100-108 (2023).

22. A. Vaidya et al., Demographic bias in misdiagnosis by computational pathology models. Nat Med 30, 1174-1190 (2024).

23. M.A. Berbis et al., Computational pathology in 2030: a Delphi study forecasting the role of AI in pathology within the next decade. EBioMedicine 88, 104427 (2023).

24. S. Berezowska et al., Digital image analysis and artificial intelligence in pathology diagnostics-the Swiss view. Pathologie (Heidelb) 44, 222-224 (2023).

25. A. L. Frei et al., Pathologist Computer-Aided Diagnostic Scoring of Tumor Cell Fraction: A Swiss National Study. Mod Pathol 36, 100335 (2023).

26. J. Galon et al., Type, density, and location of immune cells within human colorectal tumors predict clinical outcome. Science 313, 1960-1964 (2006).

27. G. Argiles et al., Localised colon cancer: ESMO Clinical Practice Guidelines for diagnosis, treatment and follow-up. Ann Oncol 31, 1291-1305 (2020).

28. P. A. Ascierto, F. M. Marincola, B. A. Fox, J. Galon, No time to die: the consensus immunoscore for predicting survival and response to chemotherapy of locally advanced colon cancer patients in a multicenter international study. Oncoimmunology 9, 1826132 (2020).

29. A. Kleppe et al., A clinical decision support system optimising adjuvant chemotherapy for colorectal cancers by integrating deep learning and pathological staging markers: a development and validation study. Lancet Oncol 23, 1221-1232 (2022).

30. O. J. Skrede et al., Deep learning for prediction of colorectal cancer outcome: a discovery and validation study. Lancet 395, 350-360 (2020).

31. C. C. Atabansi et al., A survey of Transformer applications for histopathological image analysis: New developments and future directions. Biomed Eng Online 22, 96 (2023).

32. IASLC. (2024).

33. S. I. OncLive. (2024).

34. D. Ferber et al., In-context learning enables multimodal large language models to classify cancer pathology images. Nat Commun 15, 10104 (2024).

info@onco-suisse

- Vol. 15

- Issue 2-3

- May 2025